A robot with a gentle touch

In order to support people in therapy or in everyday life in the future, machines will have to be capable of feeling and gently touching their human counterparts. Katherine J. Kuchenbecker and her team at the Max Planck Institute for Intelligent Systems in Stuttgart are currently developing the technology required for this objective and are already testing sensitive robots for initial applications.

Text: Andreas Knebl

Warm, secure, safe. That’s how a hug should feel. And that’s how it actually feels when HuggieBot’s strong arms close around you and you’re pressed against its warm, broad chest. The flagship robot from the Haptic Intelligence Department at the Max Planck Institute for Intelligent Systems in Stuttgart effortlessly embraces its human counterpart. Getting HuggieBot to this point, however, has been a long journey for Katherine J. Kuchenbecker and her team. Teaching machines to feel, that is, translating the sense of touch into technology, is challenging, and this research field is still new. Kuchenbecker’s goal is to better understand haptic interactions and thus improve the way in which humans and machines interact with each other and with the objects around them. Here, haptic interactions refer to contacts with robots that involve tactile perception. “Ultimately, I want to create interactive robotic systems that can really help people,” Kuchenbecker says. She is particularly focused on haptic applications for robots that provide physical or social support to people in need.

Controlled hugging

HuggieBot enables the researchers to study such tactile human-robot interactions. Taking the hug as an example, the researchers can test which conditions a robot has to fulfill so that people enjoy physically interacting with it. While robotic nurses will have to deal with other, often more complex and intimate physical interactions, HuggieBot and its hugs are harmless. Nevertheless, a hug requires the robot to have a lot of sensitivity. As hugs are pleasant for most people, it’s not difficult to recruit study participants to test the robot.

Alexis E. Block, the lead researcher on the project, and Kuchenbecker have set themselves the goal of making HuggieBot’s hugs feel just as reassuring, consoling, and comforting as a hug from a human. In the future, haptically intelligent robots could help close the gap between the virtual and physical worlds. After all, an increasing number of our social encounters take place in the virtual realm. Robots with a tactile sense could allow people who are physically far apart to literally get in touch with each other.

In order to develop HuggieBot, Kuchenbecker and Block first tested which physical properties a robot should have, so that people experience its hugs as natural and pleasant. They found it should be soft, warm, and about the same size as a human. It should also visually perceive the person interacting with it and haptically adapt its hug to the person’s size and posture. Finally, the robot must recognize when to end the hug.

Since then, the researchers have developed different versions of HuggieBot, such as HuggieBot 2.0, which they designed from scratch after first having worked with a slightly modified and reprogrammed commercial robot. HuggieBot 2.0 consists of a central frame, a torso that inflates like a beach ball, two industrial robot arms, and a computer screen as a head. It is dressed in a grey sweatshirt and a long purple skirt. When it recognizes a person in its environment with the help of the camera located above its face screen, it asks: “Can I have a hug, please?” and a friendly face appears on its screen. If the person then approaches, HuggieBot prepares for a hug and assesses the person’s size.

As soon as the person is within arm’s reach, it closes its arms and presses the person against its chest, which is soft and warm because it is inflated with air and equipped with a heating pad. Sensors and a control system for the arms ensure that the pressure with which HuggieBot embraces the person corresponds to a warm, tight hug. Through a pressure sensor inside the rear chamber of the inflated chest, the robot also senses whether the person returns the hug.

When the person releases the pressure or leans slightly back against the arms of HuggieBot to end the hug, the robot opens its arms. As a result, there is no unpleasant feeling of an involuntarily long hug, and the interaction with the robot is perceived as being safe. Several studies with human participants have demonstrated how well HuggieBot’s haptic elements and control system work. In one study, the participants each exchanged eight hugs with the robot, with different functions switched on or off. The results of the subsequent surveys clearly showed that haptic perception plays a major role: when the robot adapted to their size and released them in response to their signals, the participants found the hugs more pleasant and rated the interaction more positively.
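The hug sequence described above can be pictured as a small state machine. The following Python sketch is an illustration only, not the team's control software: the class names, the sensor fields, and the threshold value are all hypothetical, and the real robot's arm control is far more involved.

```python
from dataclasses import dataclass
from enum import Enum, auto


class HugState(Enum):
    WAITING = auto()   # no person within arm's reach yet
    HUGGING = auto()   # arms closed around the person
    RELEASED = auto()  # arms opened again


@dataclass
class SensorReading:
    person_in_reach: bool   # hypothetical flag derived from the camera
    chest_pressure: float   # hypothetical units, rear chamber of the chest
    leaning_back: bool      # hypothetical flag: person leans back to end the hug


# Hypothetical threshold, chosen only for illustration.
PRESSURE_RETURNED = 1.0


class HugController:
    def __init__(self) -> None:
        self.state = HugState.WAITING
        self.hug_returned = False

    def step(self, reading: SensorReading) -> HugState:
        """Advance the state machine by one sensor reading."""
        if self.state is HugState.WAITING:
            if reading.person_in_reach:
                self.state = HugState.HUGGING
        elif self.state is HugState.HUGGING:
            # Remember whether the person has squeezed back at least once.
            if reading.chest_pressure >= PRESSURE_RETURNED:
                self.hug_returned = True
            pressure_released = (
                self.hug_returned
                and reading.chest_pressure < PRESSURE_RETURNED
            )
            # Either signal ends the hug, so it is never held involuntarily.
            if pressure_released or reading.leaning_back:
                self.state = HugState.RELEASED
        return self.state
```

In this sketch, the robot opens its arms as soon as either release signal appears, which mirrors the article's point that a reactive release is what makes the interaction feel safe.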

In addition to HuggieBot, Kuchenbecker’s team is working on numerous projects that require a sense of touch. For example, her team members are researching technical ways to perceive and transmit touch and are working on a teleoperated construction robot, as well as on a robotic hand that grasps objects and classifies them based on their tactile properties. The team is furthermore developing other robots that are based on commercial platforms, but feature additional haptic capabilities to assist humans: Hera, which is designed to support therapy for children with autism, and Max, which is intended to exercise with older adults or patients undergoing rehabilitation. The wide-ranging research in Kuchenbecker’s Department has its reasons: from her days as a varsity volleyball player at Stanford University, Kuchenbecker has internalized that success is a team effort that benefits from diverse skillsets and perspectives. That’s where she gets both her motivational leadership style and her willingness to allow researchers in her Department to pursue their own ideas. The success of this approach can also be measured by the fact that many of Kuchenbecker’s team members go on to land coveted fellowships or academic positions.

The focus of Kuchenbecker’s research is also influenced by her family background. As the daughter of a surgeon and a psychologist, she chose to study mechanical engineering to bring technology and human care together. In her doctoral thesis at Stanford University, for example, Kuchenbecker looked at how teleoperated surgical robots could be improved by adding haptic feedback to their previously purely visual controls. Since then, she has developed much broader research questions: how can tactile information be technically translated and reproduced? And how can this additional sensory information help improve the interplay between humans and technology?

Until now, there has been a significant imbalance in engineering for the human senses. While humans have long been able to record and reproduce audio-visual information, and with ever increasing finesse, comparable technology for our sense of touch is missing. For haptic stimuli, there is still no equivalent to a camera or a microphone, nor to a screen or a loudspeaker. Consequently, computers and even smartphones with touch screens are still unable to allow us to physically feel digital objects. Similarly, most machines, and thus robots, are clumsy when it comes to touching objects or people. This is because, apart from cameras and simple force sensors, there is as yet no commercial piece of technology available to robots that allows them to gather information about their physical interactions. Thus, it is difficult for a robot to determine whether an object is hard and smooth or soft and rough, for example. In the same way, it is virtually impossible for a conventional robot to determine whether and, more importantly, how a human is touching it. Yet it is precisely this physical interaction between humans and robots that is crucial for many applications.

Supporting therapy

For that reason, the Hera project brings together several research strands from the Haptic Intelligence Department. Hera stands for Haptic Empathetic Robot Animal; Rachael Bevill Burns, the doctoral student leading the project, is developing it as an educational tool for children with autism. These children often have problems dealing with social touch. Usually, humans use social touch to request attention, communicate a need, or express a feeling. But many children with autism either reject touch or, at the other extreme, seek touch but have no sense for which contact is appropriate.

Thus, they may touch another person too forcefully, too frequently, or in the wrong places. Currently, children with autism commonly learn how to safely handle touch from an occupational therapist. Burns and Kuchenbecker believe that haptically intelligent robots like Hera can expand the current possibilities for therapy and care. Recently, the team interviewed numerous experienced therapists and caregivers to learn what contribution such robots could make and what characteristics they should have.

Based on the results of those interviews, the Max Planck researchers are now developing Hera, which is based on the small humanoid robot Nao. For its role as a therapy robot animal, they dressed it in a koala costume. Underneath its furry exterior, Hera will wear tactile sensors all over its body to record the physical interaction between the child and the robot. As confirmed by the experienced therapists, this application requires sensors that are soft and can reliably detect both gentle and firm touches all over the robot’s many curved surfaces. However, such sensors are not commercially available. Kuchenbecker’s team has therefore been developing fabric-based sensor modules that fulfill these requirements.

A single module consists of several layers of fabric with high and low electrical conductivity. When the sensor module is touched, the layers are pressed together and the electrical resistance between the two outer layers drops. Depending on how often, by how much, and at what frequency the resistance drops, an algorithm classifies the touches as, for example, poking, tickling, hitting, or squeezing.
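To make the idea concrete, here is a minimal Python sketch of how touches might be told apart from a resistance trace. It is a hand-written rule set under stated assumptions, not the algorithm used for Hera: the function names, the features, and every threshold are hypothetical.

```python
from typing import Sequence, Tuple


def touch_features(
    resistance: Sequence[float],
    baseline: float,
    sample_rate_hz: float,
    drop_fraction: float = 0.1,  # hypothetical: 10 % drop counts as contact
) -> Tuple[int, float, float, float]:
    """Return (contacts, deepest relative drop, contacts per second,
    fraction of samples in contact) for one resistance trace."""
    threshold = baseline * (1.0 - drop_fraction)
    contacts = 0
    pressed_samples = 0
    in_contact = False
    deepest = 0.0
    for r in resistance:
        pressed = r < threshold
        if pressed and not in_contact:
            contacts += 1  # a new drop in resistance begins
        if pressed:
            pressed_samples += 1
        in_contact = pressed
        deepest = max(deepest, (baseline - r) / baseline)
    n = len(resistance)
    rate = contacts * sample_rate_hz / n if n else 0.0
    pressed_fraction = pressed_samples / n if n else 0.0
    return contacts, deepest, rate, pressed_fraction


def classify_touch(
    contacts: int, deepest: float, rate: float, pressed_fraction: float
) -> str:
    """Map features to a touch label using illustrative thresholds."""
    if contacts == 0:
        return "none"
    if deepest >= 0.5:
        # Deep drop: sustained contact is a squeeze, brief contact a hit.
        return "squeezing" if pressed_fraction >= 0.5 else "hitting"
    if rate >= 3.0:
        return "tickling"  # many light contacts in quick succession
    return "poking"        # a brief, light contact
```

For example, `classify_touch(*touch_features(trace, baseline=100.0, sample_rate_hz=10.0))` would label a one-second trace. A real system would more plausibly learn such boundaries from recorded touches than rely on fixed thresholds.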

“While commercial robots often feature force sensors only in the wrists, Hera will be able to sense and respond to touches all over its body thanks to our fabric-based sensors,” says Kuchenbecker. This good sense of touch will allow Hera to determine whether the child’s social touches are appropriate, for example, whether the child is gripping the robot too hard or touching it in a location most people would consider inappropriate, such as the face. The robot will respond to such a transgression just as defensively or disapprovingly as a human or an animal would, but it will also signal its approval if the child learns to touch it in a more appropriate manner. Burns and Kuchenbecker envision that a therapist could use a robot like Hera to help children safely learn how to touch others.

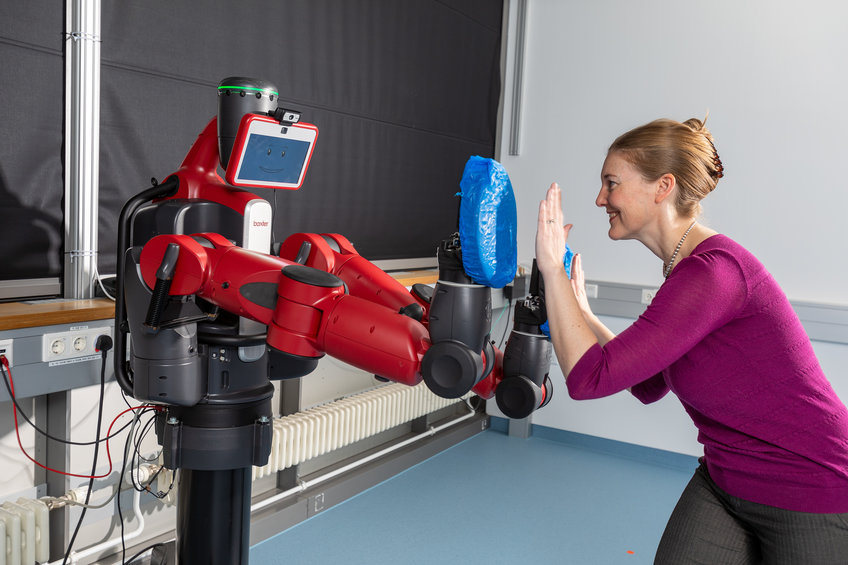

Another interactive robot system could also be used as a training aid, this time for older people or for patients in rehabilitation, for example following an operation or a stroke. Max, the Motivational Assistive eXercise coach, tirelessly plays lightweight exercise games with its human partner. It is based on Baxter, a robot modeled after a human upper body that was originally designed for the manufacturing industry. One game requires repeatedly patting the robot’s hands to wake it up. Other games require the human players to remember a long pattern of hand claps, stretch their arms out wide, or hold yoga-like poses as demonstrated by Max.

Unlike HuggieBot and Hera, Max has no custom hardware, so its haptic perception is limited to the accelerometers that are built into its two grippers. Kuchenbecker’s former doctoral student, Naomi Fitter, had the idea of covering those grippers with soft boxing pads, and she programmed the robot to be highly sensitive to the contacts that occur during hand-clapping games. Even this simple form of tactile sensing impressed both younger and older adults who came into the laboratory to test Max as part of a study. Among other things, they were asked to compare the games in which they touched the robot with those in which they only trained with it at a distance. The researchers discovered that the participants found the games that involved touching much more entertaining.

The robots with a sense of touch from Kuchenbecker’s lab are a good example of the progress that haptics has made and the possibilities that this technology offers for the interaction between humans and machines. When it comes to the digitalization of social and health-related services, however, questions of data protection and ethics arise alongside questions of technical feasibility. Hera, for example, could collect vast amounts of data about the behavior of its human partners while acting as a companion for children with autism. To whom should this data be accessible? To the parents, the therapist, the health insurance company? And will the child be informed that their new friend is sharing all this data? Similar questions also arise in the case of nursing robots. The German Ethics Council has taken a clear stance here and recommends that the use of robotics should be guided by the goals of good care and assistance. The individuality of the person needing care must be respected and, for example, special attention should be paid to self-determination and privacy.

In addition, it is important that robots do not replace human care and thereby further reduce social contacts and human interactions. Rather, the robot should only be used as a supplement for the benefit of both the caregiver and the care recipient. In all of these considerations, it is also important to take the costs into account and weigh alternatives. After all, robots that treat people sensitively will be expensive – at least initially – and will be able to perform only limited tasks. For Kuchenbecker, it is nevertheless clear that such systems have the potential to help people, since they inhabit our physical world and can interact with us in highly familiar ways. Her research is currently pushing the boundaries of what is possible by developing more and more intelligent haptic systems. Everything else we will have to negotiate as a society.

Researchers at the Max Planck Institute for Intelligent Systems are investigating the requirements robots would have to fulfill for people to experience their touch as pleasant and helpful in therapeutic or social interactions. HuggieBot, a robot that hugs people, is one of the systems the researchers use to do this.

In addition to HuggieBot, the researchers are developing and optimizing other haptic technical systems on the basis of relevant studies: Hera, for example, can be used to help teach autistic children to touch people in an appropriate manner. Max can provide training support for older adults or patients in rehabilitation.

The use of robots for therapeutic or social tasks raises ethical and data protection issues. It is not for scientists to decide the purposes for which such robots will ultimately be used.